Today, I decided to measure the input voltages that induce clipping in my operational DSET. I simply put the appropriate load resistor in place, set the signal generator to a frequency in the middle of the channel's passband, and increased the voltage until the sine wave showed the first signs of clipping.

Channel A - < 80Hz = 5.3Vpp @50Hz into 1R; 4.9Vpp @ 20Hz into 1R; 4.8Vpp @18Hz into 1R

Channel B - 60Hz to 500Hz = 10V+ @100Hz into 15R. Could not clip this channel.

Channel C - Fullrange = 5.3V @1kHz into 15R.

Channel D - 600Hz to 1000Hz = 3.85V @800Hz into 15R. [This is a single-stage SET and will be run well attenuated relative to the other channels.]

Channel E - 3.2kHz+ = 8.8V @5kHz into 15R, with the YO186 DHT tube in play.

Channel F - 10kHz+ = not tested, but it should be hunky-dory because it only handles 10kHz and up.

All good so far. The clipping limit for the amplifier seems to be about 5.3V, set by both Channels A and C. Channel D clips lower, at 3.85V, but that channel is attenuated in use, so it should not prove to be the limiter.
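To put the clip points on a common scale, here is a small Python sketch that converts each one to Vrms and dBV (re 1 Vrms) and works out how much attenuation Channel D would need before it clips no earlier than Channel C. It assumes every reading is the peak-to-peak amplitude of a sine-wave input (Channels C to E are only written as "V" above), and that Channel D's attenuation sits ahead of its input. The helper names are purely illustrative.

```python
import math


def vpp_to_vrms(vpp: float) -> float:
    """RMS value of a sine wave from its peak-to-peak amplitude."""
    return vpp / (2 * math.sqrt(2))


def db(ratio: float) -> float:
    """Voltage ratio expressed in decibels."""
    return 20 * math.log10(ratio)


# Input levels at first visible clipping, as listed above.
# Assumption: all readings are sine-wave Vpp, including the ones written as "V".
clip_vpp = {
    "A @ 50Hz": 5.3,
    "A @ 20Hz": 4.9,
    "A @ 18Hz": 4.8,
    "C @ 1kHz": 5.3,
    "D @ 800Hz": 3.85,
    "E @ 5kHz": 8.8,
}

for name, vpp in clip_vpp.items():
    vrms = vpp_to_vrms(vpp)
    print(f"{name}: {vpp:.2f} Vpp = {vrms:.2f} Vrms = {db(vrms):+.1f} dBV")

# Attenuation (assumed to be ahead of D's input) needed so D clips no earlier than C
d_margin_db = db(clip_vpp["C @ 1kHz"] / clip_vpp["D @ 800Hz"])
print(f"Channel D needs at least {d_margin_db:.1f} dB of attenuation")
```

With these figures, Channel D would need roughly 2.8dB or more of input attenuation relative to Channel C before it stops being the first channel to clip.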

Channel A clips into 1 ohm at 5.3Vpp @ 50Hz, 4.9Vpp @ 20Hz and 4.8Vpp @ 18Hz. My in-room response extends down to 18Hz and then falls away, so 18Hz is a decent target to shoot for, but I seriously doubt there will be any musical content in the 18-30Hz range at full volume, so nothing should be missed.
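As a rough sense of scale, the Channel A clip points can be expressed as headroom in dB. This sketch assumes an ideal sine and uses the 1Vpp drive level from the second plot below as the reference; `headroom_db` is a hypothetical helper, not part of any tool used in these measurements.

```python
import math


def headroom_db(clip_vpp: float, drive_vpp: float) -> float:
    """How far (in dB) a drive level sits below the clipping level."""
    return 20 * math.log10(clip_vpp / drive_vpp)


# Channel A: frequency (Hz) -> input Vpp at first clipping, into 1R
channel_a_clip = {50: 5.3, 20: 4.9, 18: 4.8}
drive = 1.0  # the "sedate" 1Vpp test level

for freq, clip in channel_a_clip.items():
    print(f"{freq} Hz: {headroom_db(clip, drive):.1f} dB headroom at {drive} Vpp")

# How much earlier 18Hz clips compared with 50Hz
early_db = headroom_db(channel_a_clip[50], channel_a_clip[18])
print(f"18Hz clips {early_db:.2f} dB earlier than 50Hz")
```

On these numbers, 18Hz clips a little under 1dB earlier than 50Hz, and the 1Vpp trace sits roughly 13.6dB below the 18Hz clip point.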

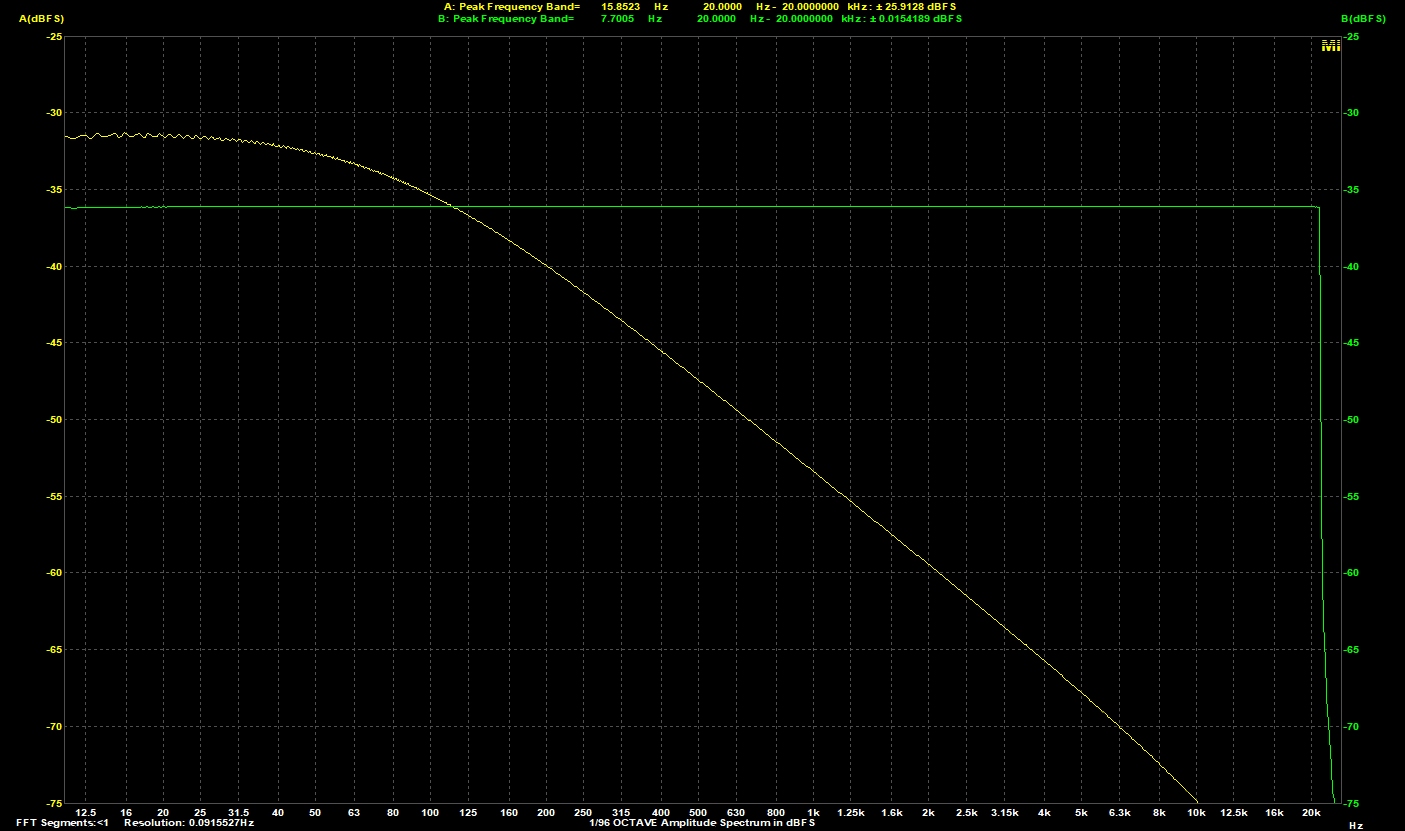

Here is the response at the clipping point for 18Hz, which is 4.8Vpp (note that the 80Hz first-order low pass is active):

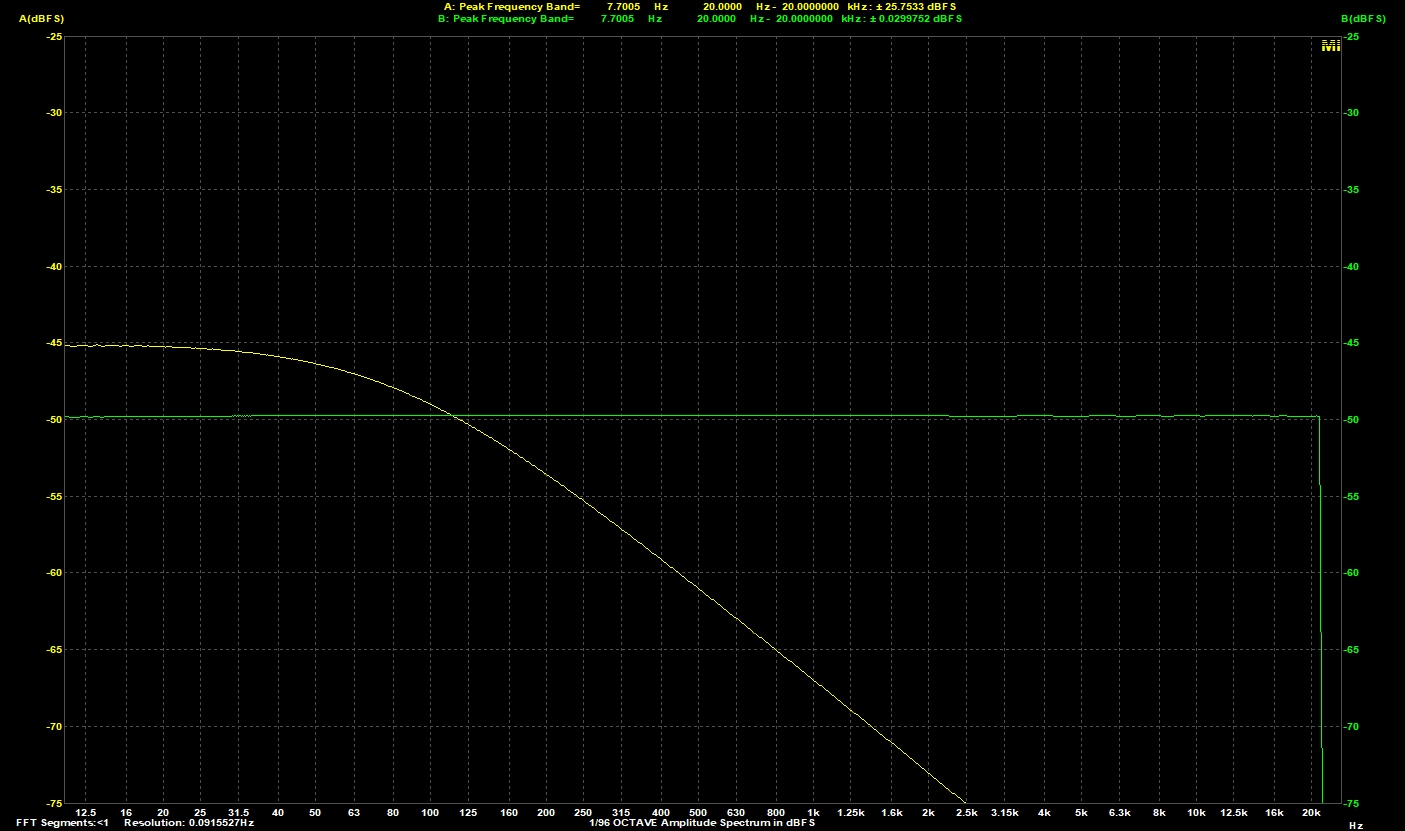
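For a sense of how little the 80Hz low pass contributes at 18Hz, this sketch evaluates the magnitude of an ideal first-order low pass, |H| = 1/sqrt(1 + (f/fc)^2). It assumes the corner is exactly 80Hz and ignores component tolerances and any interaction with the load, so treat the numbers as approximate.

```python
import math


def first_order_lowpass_db(f_hz: float, fc_hz: float) -> float:
    """Magnitude (dB) of an ideal first-order low pass with its -3dB corner at fc_hz."""
    return -10 * math.log10(1 + (f_hz / fc_hz) ** 2)


fc = 80.0  # assumed exact corner of Channel A's first-order low pass

for f in (18, 20, 50, 80, 160):
    print(f"{f:>4} Hz: {first_order_lowpass_db(f, fc):6.2f} dB")
```

Under these assumptions the filter takes only about 0.2dB off at 18Hz, so the low pass by itself does little at the bottom of the range.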

Likewise, this is for a more sedate 1Vpp into 1R:
